The jagged reality of AI adoption in 2025

And looking ahead to building leadership confidence in 2026

Over the past year we’ve been privileged to support over 60 organisations on their AI journey. It is clear that the way organisations adopt, and adapt to, AI technologies is very jagged.

Wharton Business School professor Ethan Mollick coined the term “Jagged Frontier” to describe a core reality of today’s AI systems: they can be astonishingly capable in one moment, and unexpectedly unreliable the next.

We see the same unevenness playing out across organisations - not just in tools, but in emotions, teams, leadership, and the wider social impact.

Jagged technology: High ceiling, basic flaws

The capability of frontier generative AI tools has grown phenomenally over the course of the year, with highly powerful reasoning models, deep research, and seriously good image and video capabilities, coupled with the emergence of AI agents. The ceiling of their capability is high, but the technology still shows flaws on even the simplest of tasks.

Teams can see genuine productivity gains in one area, then hit sharp limitations elsewhere. Inconsistent outputs, unreliable reasoning, a lack of transparency and risks around bias all create a tension between what the tools can do and what it takes to use them responsibly.

Knowing how to choose the ‘right tool for the right job’ matters more and more, and helping teams make conscious decisions about which AI model to use has become an increasingly important part of our work.

Jagged individuals: Emotions abound

AI technologies elicit a broad range of emotions, and we’ve noticed these become far more polarised over the course of the year.

More and more, we’re walking into rooms where people hold strong, conflicting views — excitement, fear, scepticism, fatigue, cynicism, hope — often all at once. Those emotions shape whether people try tools, avoid them, misuse them, or adopt them without support.

This isn’t about forcing positivity. Practical training and instructions to ‘use AI’ are not enough on their own. Leaders need to create enough psychological safety for honest conversation.

There is absolutely a need for individual learning journeys, but the importance of teams exploring how to work with AI together cannot be overstated. We've intentionally built more reflection and conversation time into our ‘Experimenting Safely’ programme, and we make sure our team members have strong coaching backgrounds so they can hold powerful conversations.

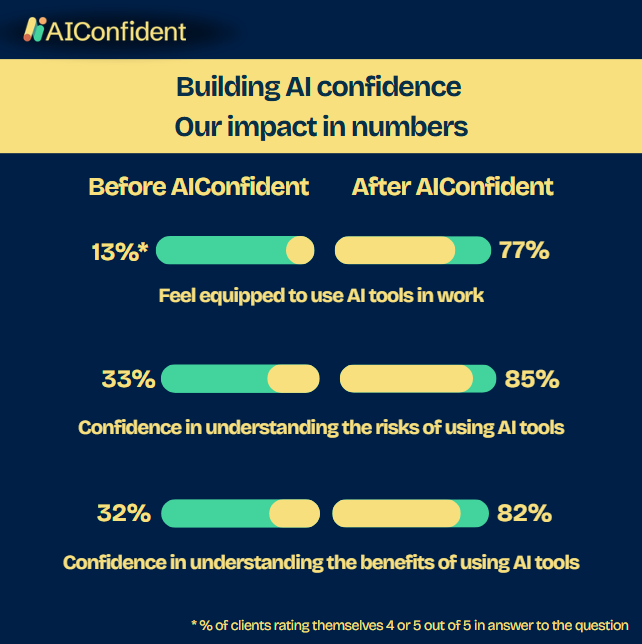

Our work is making a difference: a simple analysis of organisations' sentiment before and after working with us shows a marked shift, but more needs to be done.

Jagged organisational adoption

Some organisations have clear leadership messaging, rolled out safe tools, put a workable policy in place, and invested in training that people can actually use. Others are still stuck at the start: uncertainty about what’s allowed, fragmented ownership, unclear governance, and a sense of “we should be doing something” without a shared plan.

And even where the basics exist, they don’t always translate into practice. A policy can be technically correct and still fail if it’s hard to interpret, hard to access, or disconnected from real day-to-day work.

Those who are succeeding are turning their intent into consistent behaviour, creating the conditions that allow people to innovate safely and responsibly.

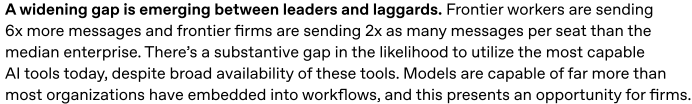

Don’t just take our word for it either. OpenAI, who have direct insight into how organisations are using ChatGPT, said this in their recent ‘State of Enterprise AI’ paper:

Jagged leadership

This is where everything else gets amplified.

Some leaders still frame AI primarily as a cost-saving or efficiency story, missing the bigger shifts now unfolding: changes to operating models, service delivery, stakeholder expectations, and (for mission-led organisations) how impact is created.

Other leaders are making time to explore how these technologies might change their mission or value proposition - and what responsible use means for their people and their communities. The most exciting organisations we’ve worked with are starting to drive systemic change: stepping into their role as shapers of culture, norms and public understanding, not just implementers of tools.

This is the leadership move we care about most: going from reacting to the hype to consciously shaping the direction of the organisation, while building teams' confidence to make deliberate choices, even when the future is uncertain.

Jagged social impact

Finally, there’s a layer of jaggedness beyond any single organisation: the uneven and increasingly visible social impacts of AI.

Some of the harms many of us have been concerned about for a while are no longer hypothetical. We’re seeing clearer signals, and in some cases lived experience, emerging in areas such as jobs and entry-level pathways; mental health, particularly for young people; and how information is shared, consumed and trusted.

For leaders, this matters because organisational adoption doesn’t happen in a vacuum, which is why we always talk about adaptation as well as adoption. The choices we make inside organisations shape the world outside them, and our external environment, in turn, shapes what’s possible internally. If we want a future we can stand behind, we need leaders who are willing to treat social impact as part of the strategy, not an afterthought.

The gap is widening — and this is where confidence matters

Like the technology itself — where the state of the art has moved on quickly — the gap is widening at every other level too: individual, organisational, leadership, and the broader social and economic impact.

This is why we remain steadfast in our view that leadership buy-in, capacity and confidence are what is required to shape the future we want, rather than simply inherit it.

Real confidence is built purposefully. It comes from being clear about why AI technologies are important to your mission, holding space for difficult conversations, creating easy-to-understand governance, and giving teams the confidence to explore and innovate safely.

This year, we have helped over 60 organisations move from hype and paralysis to clear, responsible action, by:

- Building absolute confidence: Creating spaces for powerful conversations that enabled teams to share their views and move past the hype - making them ready to engage.

- Focusing on strategy and purpose: Helping leaders get clarity on how AI connects with organisational strategy and mission, so adoption is intentional rather than accidental.

- Creating cultures of safe innovation: Putting governance frameworks in place that enabled teams to trial tools responsibly and in ways aligned with their values.

- Being future-focused: Sharing visions of an AI-enabled future, exploring the broader implications of AI use (including by others), and the systemic changes that are coming, enabling leaders to take long-term decisions with confidence.

The results speak for themselves, but there's plenty more to be done...

Looking Ahead to 2026

Early in the New Year we’ll set out the things we think are worth keeping an eye on in 2026. It’s fairly inevitable that this list will include:

- Agents (and how we govern them): moving from “assistive tools” to systems that take actions — and the governance that must come with that.

- The growing utility of non-frontier and open-source models: particularly for community-based organisations who need affordability, control and context-specific solutions.

- Moving from “we rolled out Copilot” to genuinely ambitious use: deeper workflow redesign, new operating rhythms, and more meaningful transformation.

- Alignment: people starting to pay more attention to the values encoded in the AI models they use, and how these align with their own organisational values.

Clients often want to know where they stand relative to their peers in terms of AI adoption, and this is a hard question to answer. On the one hand, it's OK and understandable to still be in the foothills. On the other hand, this moment is now urgent: the pace is accelerating, and the gap is widening. Taking the first steps and opening the conversation in your leadership team and beyond is important.

Prioritising you as leaders

In 2026 we'll be redoubling our focus on supporting leaders to build organisations that adopt, and adapt to, AI technologies with confidence.

As well as continuing our work with whole organisations, we’ll be launching new programmes to build leadership capacity, focused on:

Directly supporting AI Leads and Champions inside organisations

These are the people doing the day-to-day heavy lifting of culture change: building guardrails, answering questions, running training, shifting norms, and turning uncertainty into momentum — often alongside their “real job”.

We’re launching new cohort-based support for AI Leads/Champions at different stages. The focus is practical: building confidence and producing shareable artefacts that help you to actually implement change.

Supporting transformational leaders driving systemic change

Alongside organisational adoption, we’re also building deeper support for leaders who want to respond to this moment at a systemic level — thinking beyond internal use cases to the wider societal implications, and acting to shape outcomes.

This will include a mix of cohort-based experiences and more bespoke 1:1 support. We’ll share more on this soon.

A quick ask: tell us what support you need

Being the AI lead in your organisation can be an incredibly fulfilling and exciting position, and a potentially career-changing opportunity. But we know these roles can be difficult, carrying a heavy burden with little support, so we’re putting together some programmes just for you lucky AI leads!

We’re gathering insights from AI leads across SMEs and not-for-profits to understand the barriers you’re facing and the support you need, so that we can perfect a combination of expert coaching and guidance, cutting-edge tools and methodologies, and peer support from AI leads in organisations just like yours. All designed to get you racing forward with your AI change journey.

If this resonates, help us shape exactly what this support should look like by answering just 3 questions, and put your name down for a further 10% off our introductory price.

--


Image: Jamillah Knowles & Digit / https://betterimagesofai.org / https://creativecommons.org/licenses/by/4.0/