Mind your language

Why the words we use about AI technologies shape how we control them

How we talk about AI technologies directly influences how much choice we have over our future. Too often, we describe ‘AI’ as though it is an independent force, something unstoppable and inevitable. In reality, from the point at which an AI tool is developed right through to its use, there is a series of human decisions, many of which we either control directly or can influence.

The window to exercise this control and influence is narrowing. Leaders across society need to build confidence in their understanding of these technologies in order to make conscious, informed decisions about the role that we want them to play.

Every time we say something like “AI is changing education” or “AI will destroy creativity”, we erase the people behind those outcomes and surrender a bit of that control.

Changing it up

Think about the kinds of AI headlines we see every day. Now imagine how they sound when we reframe them around the human decisions that got us there:

- Rather than asking “Will AI make us happier?”, why not ask “How can we use AI in ways that support happiness, and avoid uses that make us unhappy?”

- Instead of “AI is preventing children from learning”, how about “The ways children are using AI tools are reducing their opportunities to learn. Have we given them enough support?”

- We constantly hear “AI is taking jobs”, when what is really meant is “Employers are choosing to restructure work in ways that cut jobs rather than drive growth.”

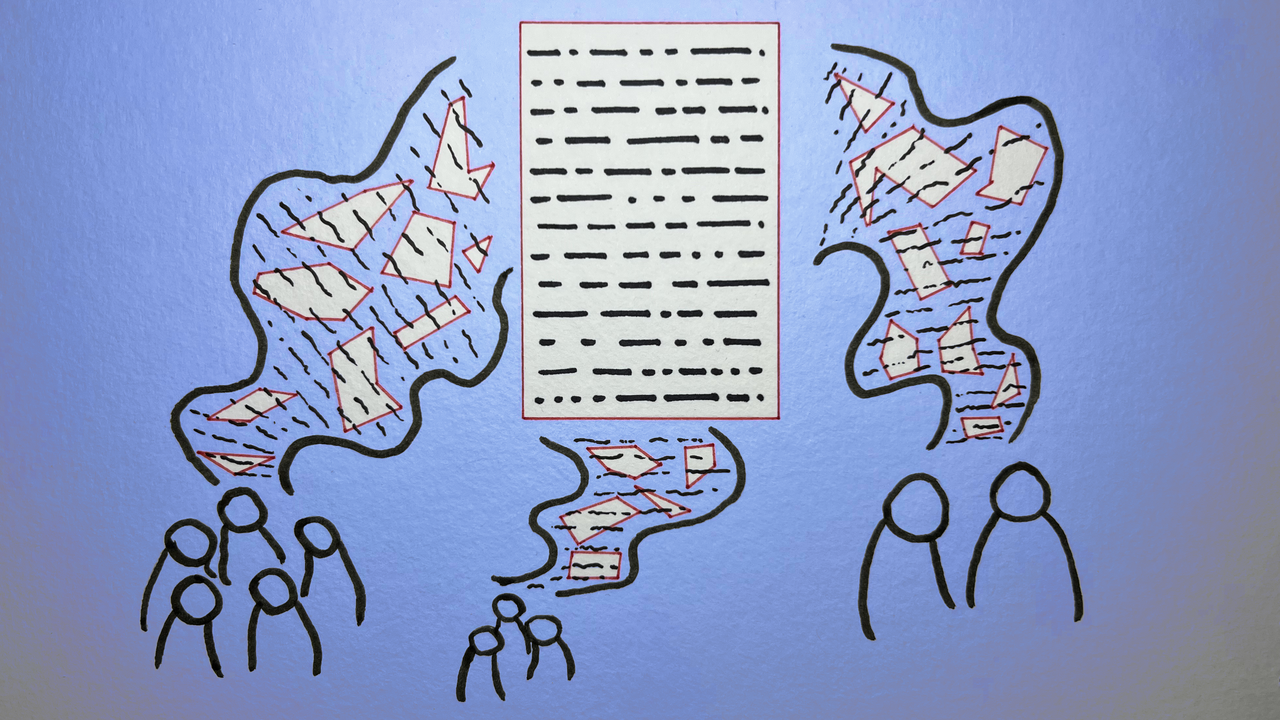

The opportunities to intervene

Every AI system is shaped by people. At each stage, human choices determine the outcomes these technologies have on society. Understanding these decisions helps us see where influence truly sits, and where confident leadership makes the biggest difference.

I’ve picked just four of the many decision points to emphasise this, starting with those closest to us and working up to the hardest to influence.

1. Personal decisions — how each of us chooses to use AI

This is the easiest level to influence because it’s where we each have direct control. Every day we make choices about whether and how to use AI tools, whether we are drafting an email, planning a trip, checking facts, or using social media.

When do we trust them? When do we challenge them? When do we decide not to use them at all? Sometimes, using AI saves time; other times, it takes away opportunities to learn or connect.

Confidence here comes from understanding both the benefits and the limits of these tools, and using them intentionally. Education and training play a vital role. We can’t expect people to use AI technologies wisely if they don’t understand how they work or what they’re good for.

2. Adoption decisions — how organisations choose to use AI

At the organisational level, leaders decide how AI technologies fit into their work and culture.

The most confident leaders ask: Where does it genuinely help? Where must people stay in the loop? What are the uniquely human strengths we want to protect?

But many feel pressured to “do something with AI”. True confidence means knowing when not to use it. Leaders can also influence how their teams and stakeholders use AI by setting clear expectations, for example in recruitment, communication, or service delivery.

The most effective approach combines policy, values and training to create a culture of safe experimentation. Clear communication about purpose and boundaries helps everyone make better choices. And leaders always have a choice about whether to use AI to replace people, or to empower their people to do more.

3. Governance decisions — shaping the rules, not waiting for them

Governments, standards bodies and major institutions set the rules for how AI technologies are released and controlled. For example, they decide which types of AI approaches can be used in recruitment, education, or healthcare.

For four years I lived this challenge: setting national-level AI policy and governance was not easy. Technology moves faster than regulation, governance and standards can keep up with. General-purpose tools can be both incredibly helpful and deeply harmful depending on how they’re used, which makes them hard to regulate. But these rules aren’t out of our hands.

We can influence them by engaging in consultations, responding to calls for evidence, advocating for proportionate safeguards, and demanding accountability. Those working with young people or vulnerable groups can lobby for safeguards and standards that protect the people their organisations exist to serve.

Most importantly, we can refuse the idea of inevitability. Governance is a human design choice. Confidence at this level means being vocal, visible and values-driven, showing policymakers that society expects AI technologies to serve the public good, not the other way around.

4. Design decisions — what kind of AI we create and demand

This is the hardest level to influence, but not impossible. Design decisions set the intent behind AI technologies. Developers, investors and researchers decide:

- What problems the model is built to solve

- What data it is trained on—and whose voices are included

- How the model behaves when prompted

- What safety measures are built in before release

Every downstream effect starts here. The social values reflected - or ignored - at this stage influence everything that follows.

We can shape design indirectly through what we buy, invest in, and endorse. We can choose tools and suppliers that align with our values. Not every task requires a powerful frontier model. By selecting systems that are trained on data we trust, tested for safety, and transparent about their limits, we can make conscious choices about the values our AI tools are aligned with.

Confidence here means knowing what questions to ask of developers and vendors, and what standards to expect.

This couldn’t be more urgent

From the outset, our mission at AIConfident has been to equip leaders across society - particularly those who have previously not had reason to engage with AI technologies - with the confidence to shape how AI technologies are developed, governed and used.

This mission is now urgent. Increasingly powerful AI technologies are becoming deeply integrated into our daily lives. We need leaders, deep experts in their own domains, to influence and shape a future where AI technologies have a genuinely positive impact on society.

An important start to that mission is using language which empowers people to have a say in how AI technologies are integrated into society.

Image Credit: Yasmine Boudiaf & LOTI / https://betterimagesofai.org / https://creativecommons.org/licenses/by/4.0/